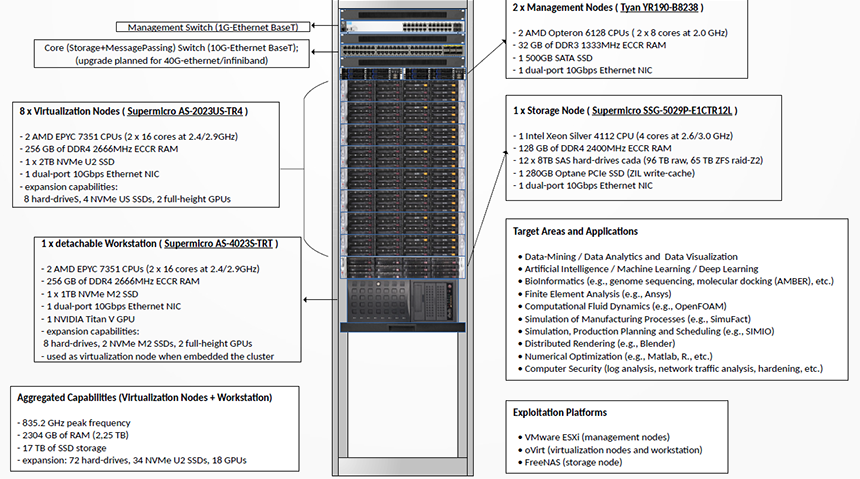

Cluster infrastructure

The cluster infrastructure makes it possible to run highly complex data-analysis applications that demand high processing capability, such as those employing Big Data techniques or deep learning algorithms. The cluster is maintained by CeDRI researchers and may be used internally by other research groups or externally by the community.

Initially comprising nine computing nodes and one storage node, interconnected by a 10 Gbps Ethernet network, the cluster was designed to support, in the medium term, R&D projects and activities with particular needs for computational capacity and for storing and handling large amounts of data. Since its acquisition, however, the cluster has undergone several improvements: it currently includes three extra computing nodes and five extra GPU-type co-processors. Moreover, the network will be upgraded to 40 Gbps Ethernet during 2020.

Today, in aggregate terms, the computing nodes provide a combined CPU frequency of 1032 GHz (peak value), a total RAM capacity of 2688 GBytes (2.625 TBytes), and 20 TBytes of secondary storage on SSDs. The storage server has an overall hard-disk capacity of 96 TBytes (raw). The resources contributed by each group of nodes are as follows:

- 9 nodes each have i) 2 AMD EPYC 7351 CPUs (32 cores in total, at 2.9 GHz) and ii) 256 GB of ECC registered DDR4 RAM at 2666 MHz;

- 1 node has i) 1 AMD EPYC 7551P CPU (32 cores at 2.55 GHz) and ii) 128 GB of ECC registered DDR4 RAM at 2666 MHz;

- 2 nodes each have i) 1 Intel Xeon W-2195 CPU (18 cores at 3.2 GHz) and ii) 128 GB of ECC registered DDR4 RAM at 2666 MHz;

- 5 nodes each have 1 GPU (in total, 1 NVIDIA Titan V and 4 NVIDIA RTX 2080 Ti);

- all nodes have i) 1 NVMe SSD for local storage (2 TB or 1 TB) and ii) 1 dual-port 10 Gbps Ethernet network card.
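The aggregate figures quoted above can be cross-checked against the per-node list. The sketch below is only an illustration: it assumes the "combined CPU frequency" is obtained by multiplying each node's core count by the per-core clock given in the list and summing over all nodes, and the `NodeGroup` type and `aggregate` function are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    """One bullet of the per-node list (hypothetical helper type)."""
    count: int     # number of identical nodes in the group
    cores: int     # CPU cores per node, summed over all sockets
    ghz: float     # per-core clock as stated in the list (GHz)
    ram_gb: int    # RAM per node (GB)


# Values copied from the list; GPUs and SSDs are not needed for these totals.
GROUPS = [
    NodeGroup(count=9, cores=32, ghz=2.9, ram_gb=256),   # 2x AMD EPYC 7351
    NodeGroup(count=1, cores=32, ghz=2.55, ram_gb=128),  # 1x AMD EPYC 7551P
    NodeGroup(count=2, cores=18, ghz=3.2, ram_gb=128),   # 1x Intel Xeon W-2195
]


def aggregate(groups):
    """Return (total cores, combined GHz, total RAM in GB, total RAM in TB).

    Combined GHz assumes sum(nodes * cores * clock); TB uses 1 TB = 1024 GB,
    matching the 2688 GB -> 2.625 TB conversion in the text.
    """
    cores = sum(g.count * g.cores for g in groups)
    ghz = sum(g.count * g.cores * g.ghz for g in groups)
    ram_gb = sum(g.count * g.ram_gb for g in groups)
    return cores, round(ghz, 1), ram_gb, ram_gb / 1024


if __name__ == "__main__":
    cores, ghz, ram_gb, ram_tb = aggregate(GROUPS)
    print(f"{cores} cores, {ghz} GHz combined, {ram_gb} GB RAM ({ram_tb} TB)")
```

Under that assumption, the per-group products (835.2 + 81.6 + 115.2 GHz and 2304 + 128 + 256 GB) reproduce the 1032 GHz and 2688 GB totals stated above.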

In terms of future expansion, every node can host additional local storage (up to 8 hard drives and up to 3 NVMe SSDs) as well as up to 2 GPUs.

Rather than following the traditional HPC cluster model, made of bare-metal systems dedicated exclusively to computation and operated through a batch management system, the cluster is managed and exploited using an open-source bare-metal virtualization platform (Red Hat oVirt). This platform supports the coexistence of multiple virtual mini-clusters (groups of virtual machines operating in isolated virtual networks, each targeting a group of users/researchers with different needs) and provides self-service facilities through an "à la carte" virtual machine management portal. The cluster currently hosts, typically, more than 200 virtual machines, running both Windows and Linux operating systems (desktop and server editions) and meeting a diverse range of needs (scientific/research, educational, and even infrastructure).

Cluster diagram